What is the "robots.txt blocked" problem in Search Console?

The robots.txt blocked problem (shown in Search Console as "Blocked by robots.txt") means that Google tried to crawl a page on your site, but your robots.txt file does not allow it.

Why does it happen?

It happens because your robots.txt file contains a rule, such as Disallow, that prevents crawlers from accessing a page on your website.

To resolve this problem, follow these steps:

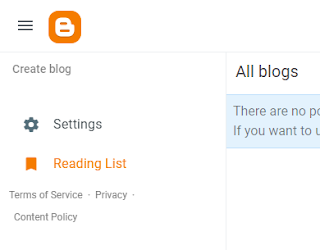

1. Go to Settings in your Blogger dashboard.

2. Find the custom robots.txt option and click on it.

3. You will see a robots.txt file like this:

User-agent: *

Disallow: /search

Disallow: /category/

Disallow: /tag/

Allow: /

Disallow: /*?m=1

Sitemap: https://(your website URL will be shown here)

4. Change Disallow: /search to Allow: /search so that crawlers can access URLs starting with /search.

5. Click Save.

6. Go to Search Console and click on Pages.

7. Find the robots.txt error and click on it.

8. You will see a Validate option there.

9. Click on the Validate option.

10. Wait a few days for Google to recrawl the affected pages; the problem should then be sorted out.
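If you want a rough check of the rule change in step 4 before you save it, the sketch below uses Python's standard urllib.robotparser module. The example.blogspot.com URLs and the is_allowed helper are made up for illustration. Python's parser applies rules in the order they appear and does not handle wildcard rules such as /*?m=1 the way Google does, so that line is left out here and the result is only an approximation of Googlebot's behaviour.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url.

    Hypothetical helper for illustration; it parses the text locally
    instead of downloading robots.txt from a live site.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.strip().splitlines())
    return parser.can_fetch(user_agent, url)


# Rules as shown in step 3 (wildcard line omitted, see the note above).
BEFORE = """
User-agent: *
Disallow: /search
Disallow: /category/
Disallow: /tag/
Allow: /
"""

# Step 4: replace the Disallow rule for /search with an Allow rule.
AFTER = BEFORE.replace("Disallow: /search", "Allow: /search")

# Placeholder URLs; replace them with pages from your own blog.
LABEL_URL = "https://example.blogspot.com/search/label/news"
POST_URL = "https://example.blogspot.com/2024/01/my-post.html"

print("Label page before the change:", is_allowed(BEFORE, LABEL_URL))
print("Label page after the change: ", is_allowed(AFTER, LABEL_URL))
print("Normal post before the change:", is_allowed(BEFORE, POST_URL))
```

The label page is reported as blocked with the original rules and as allowed after the edit, while an ordinary post URL is allowed in both cases.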
